Security concerns stop many schools and organizations from adopting new technology. When it comes to AI Attendance Systems, these worries become even stronger. After all, we’re talking about facial recognition and biometric data. Parents want to know their children’s photos are safe. Teachers need assurance that the system protects their privacy.

The good news is that modern AI Group Attendance Systems take security very seriously. They use multiple layers of protection to keep data safe. Understanding how these security features work helps you make informed decisions about implementing this technology.

Understanding Biometric Data Security

Biometric data is special. Unlike a password, which you can change, your face stays the same. This makes biometric information highly sensitive. An AI Attendance System captures your facial image and converts it into a digital template. This template is not a photo. It’s a mathematical representation of your facial features, usually a list of numbers. Think of it like a unique code that describes your face.

The system stores this template in an encrypted format. Even if someone accessed the database, they would first have to break the encryption, and the template itself is designed so that your actual photo cannot easily be recreated from it. This is the first layer of security that protects your identity. Quality systems never store raw photos in accessible formats. They process images immediately and save only the encrypted templates. This approach minimizes risk right from the start.
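
To make the idea of a template concrete, here is a minimal sketch of how two templates could be compared. It assumes a template is a short list of numbers and uses cosine similarity, a common way to compare such vectors. The four-number templates, the `MATCH_THRESHOLD` value, and the function names are illustrative assumptions, not details of any particular product.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("templates must be non-zero")
    return dot / (norm_a * norm_b)


# Hypothetical threshold; real systems tune this value on their own data.
MATCH_THRESHOLD = 0.8


def is_same_person(enrolled, probe, threshold=MATCH_THRESHOLD):
    """Treat two templates as a match when they point in nearly the same direction."""
    return cosine_similarity(enrolled, probe) >= threshold


# Toy templates with four numbers each; real templates are much longer.
enrolled = [0.12, -0.48, 0.33, 0.80]
same_person = [0.10, -0.50, 0.30, 0.82]
other_person = [-0.70, 0.20, 0.65, -0.10]

print(is_same_person(enrolled, same_person))
print(is_same_person(enrolled, other_person))
```

The comparison works on numbers only. No photo is needed at matching time, which is why a system can discard the raw image after creating the template.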

Encryption Protects Your Data

Encryption is like putting your data inside a locked safe. Only people with the correct key can open it and see what’s inside.

Modern AI Group Attendance Systems use industry-standard encryption algorithms like AES-256. This algorithm is widely used in banking to protect financial transactions. Without the encryption key, breaking it by brute force is computationally infeasible with current technology.

The encryption happens at multiple stages. First, the system encrypts data when it captures your facial template. Second, it encrypts data during transmission from the device to the server. Third, it keeps data encrypted while stored in the database. Even administrators with access to the system cannot view the raw biometric data. They can only see attendance records and reports. The facial templates remain locked behind encryption.

Access Control Keeps Data Private

Not everyone in your organization needs access to biometric data. Smart AI Attendance Systems use role-based access control (RBAC) to limit who can see what. Teachers might access attendance records for their classes only. They cannot view data from other classes or teachers. Administrators see school-wide reports but not the underlying biometric templates.

The system maintains detailed logs of who accessed what data and when. This creates accountability and helps detect any suspicious activity. If someone tries to access data they shouldn’t, the system flags this attempt immediately. This tiered access structure follows the principle of least privilege. People get only the minimum access they need to do their jobs. This limits exposure and reduces security risks.
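
The combination of role-based access and access logging can be sketched in a few lines. This is a simplified illustration under assumed names: the roles, the permission strings, and the `AccessLog` class are hypothetical. Note that no role in the map is granted access to biometric templates.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission map. No role may view biometric templates.
ROLE_PERMISSIONS = {
    "teacher": {"view_own_class_attendance"},
    "admin": {"view_own_class_attendance", "view_school_reports"},
}


@dataclass
class AccessLog:
    """Keeps a record of every access attempt, allowed or denied."""
    entries: list = field(default_factory=list)

    def record(self, user, role, permission, allowed):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
        })

    def denied(self):
        """Return the attempts that were refused, for review."""
        return [entry for entry in self.entries if not entry["allowed"]]


def check_access(user, role, permission, log):
    """Allow only permissions granted to the role, and log every attempt."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, permission, allowed)
    return allowed


log = AccessLog()
check_access("teacher_01", "teacher", "view_own_class_attendance", log)
check_access("teacher_01", "teacher", "view_school_reports", log)
check_access("admin_01", "admin", "view_biometric_templates", log)
print(len(log.denied()))
```

Because denied attempts are logged along with allowed ones, a reviewer can spot a user who keeps requesting data outside their role.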

Preventing Fraud and Proxy Attendance

One major security benefit of AI Group Attendance Systems is their ability to prevent attendance fraud. Traditional methods are easy to cheat. Students answer for absent friends. Employees clock in for colleagues who are late. Facial recognition makes proxy attendance far harder. The system verifies your physical presence through your unique facial features. Advanced systems are also designed to reject a photo or video held up to the camera.

Modern systems include liveness detection technology. This feature checks that the person marking attendance is physically present in front of the camera. It detects attempts to use printed photos, videos on phones, or masks. Some systems require users to blink their eyes during verification. This simple action shows you’re a real person, not a static image. With these anti-spoofing measures in place, accuracy rates can reach up to 99.5%.
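
A blink check like the one described above can be sketched as follows. The sketch assumes some face-tracking component (not shown) already produces an eye-openness score for each video frame, where a low score means the eye is closed. The threshold values and function names are illustrative assumptions, and real liveness detection typically combines several signals rather than relying on blinking alone.

```python
def count_blinks(eye_openness, closed_threshold=0.2, min_closed_frames=2):
    """Count blinks in a per-frame sequence of eye-openness scores.

    A blink is a run of at least `min_closed_frames` consecutive frames
    below `closed_threshold`, followed by a frame where the eye is open again.
    """
    blinks = 0
    closed_run = 0
    for score in eye_openness:
        if score < closed_threshold:
            closed_run += 1
        else:
            if closed_run >= min_closed_frames:
                blinks += 1
            closed_run = 0
    return blinks


def passes_liveness(eye_openness, required_blinks=1):
    """Pass only if the required number of blinks was observed."""
    return count_blinks(eye_openness) >= required_blinks


# A printed photo gives nearly constant scores; a live face dips during a blink.
printed_photo = [0.30, 0.30, 0.31, 0.30, 0.30, 0.30]
live_person = [0.31, 0.30, 0.12, 0.10, 0.29, 0.30]

print(passes_liveness(printed_photo))
print(passes_liveness(live_person))
```

Requiring at least two closed frames is a simple guard against a single noisy frame being counted as a blink.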

Legal Compliance and Regulations

Countries worldwide have recognized the sensitivity of biometric data. They’ve created strict laws governing how organizations can collect and use this information. In India, the Digital Personal Data Protection (DPDP) Act 2023 and Rules 2025 govern how organizations process digital personal data, which includes biometric data. These regulations share core principles with global standards like Europe’s GDPR, which treats biometric data used for identification as a special category.

Organizations must obtain explicit consent before collecting biometric data. They must explain clearly why they’re collecting it and how they’ll use it. This transparency builds trust with users. The rules also mandate purpose limitation. Organizations can use biometric data only for the specific purpose stated during collection. An attendance system cannot use your facial data for marketing or other unrelated purposes.
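
Purpose limitation can be enforced in software as well as in policy. The sketch below assumes each user has a consent record listing the purposes they agreed to. The `ConsentRecord` class and the purpose names are hypothetical and only illustrate the rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsentRecord:
    """What a user agreed to when their biometric data was collected."""
    user_id: str
    purposes: frozenset
    withdrawn: bool = False


def may_process(consent, purpose):
    """Allow processing only for a consented purpose, and only while consent stands."""
    return (not consent.withdrawn) and purpose in consent.purposes


student = ConsentRecord("student_42", frozenset({"attendance"}))

print(may_process(student, "attendance"))
print(may_process(student, "marketing"))
```

Any code path that touches facial data would call a check like `may_process` first, so a request for an unrelated purpose such as marketing is refused by default.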

Failure to comply with these regulations brings heavy penalties and reputational damage. This legal framework gives users protection and organizations clear guidelines to follow.

How DutyPar Ensures Security

DutyPar by IndoAi Technologies has built security into every aspect of its AI Attendance System. The platform processes over 1.5 million images daily across 6,000+ organizations, which shows that it operates reliably at scale.

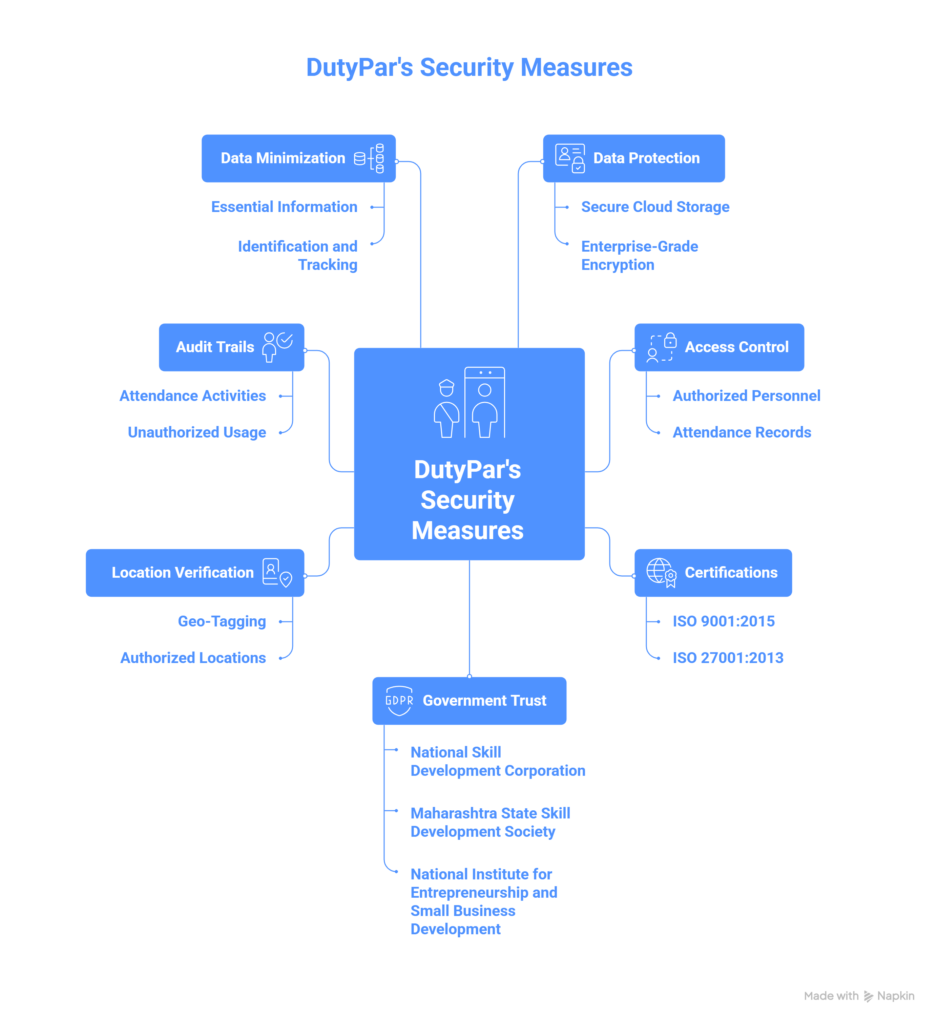

The system uses secure cloud storage with enterprise-grade encryption to protect all biometric data. This ensures that facial templates remain safe from unauthorized access. DutyPar implements strict access controls that allow only authorized personnel to view attendance records.

DutyPar has earned ISO 9001:2015 and ISO 27001:2013 certifications. These international standards verify that the company follows best practices for quality management and information security. ISO 27001 specifically covers information security management, including how sensitive data is protected.

The platform’s security is trusted by prestigious government organizations. The National Skill Development Corporation, Maharashtra State Skill Development Society, and the National Institute for Entrepreneurship and Small Business Development all use DutyPar. These organizations have strict security requirements, and their trust demonstrates DutyPar’s commitment to protection.

DutyPar also implements location verification through geo-tagging. This ensures attendance gets marked only from authorized locations. Teachers and students cannot mark attendance from random places outside the school premises. The system maintains detailed audit trails of all attendance activities. Administrators can track when attendance was marked, by whom, and from which location. This transparency helps detect and prevent any unauthorized usage.
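
A location check of this kind can be sketched with the haversine formula, which gives the distance between two GPS coordinates. This is a generic illustration, not DutyPar's implementation. The campus coordinates, the 200-meter radius, and the function names are assumptions chosen for the example.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point rounding before asin.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def within_geofence(lat, lon, center_lat, center_lon, radius_m):
    """True when the point lies within radius_m of the authorized center."""
    return haversine_m(lat, lon, center_lat, center_lon) <= radius_m


# Hypothetical campus location and allowed radius.
CAMPUS_LAT, CAMPUS_LON = 18.5204, 73.8567
ALLOWED_RADIUS_M = 200

# About 111 meters north of the campus center: allowed.
print(within_geofence(18.5214, 73.8567, CAMPUS_LAT, CAMPUS_LON, ALLOWED_RADIUS_M))
# About 1.1 kilometers north: rejected.
print(within_geofence(18.5304, 73.8567, CAMPUS_LAT, CAMPUS_LON, ALLOWED_RADIUS_M))
```

In practice the radius has to allow for GPS error, so a very tight fence can reject people who are actually on the premises.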

DutyPar follows data minimization principles by collecting only essential information for attendance purposes. The system doesn’t gather unnecessary personal details beyond what’s needed for identification and attendance tracking.

Comparing Security Features

The Bottom Line on Security

Modern AI Attendance Systems are highly secure when implemented correctly. They combine strong encryption such as AES-256, role-based access controls, liveness detection, and compliance with data protection laws. In several respects, these security features exceed those of traditional attendance methods. Paper registers can be stolen, altered, or viewed by anyone. Manual systems have no audit trails or access controls.

Facial recognition technology itself strengthens security by making proxy attendance and time theft much harder. Its accuracy and fraud prevention capabilities improve both security and reliability. Platforms like DutyPar demonstrate that these systems can be both highly functional and secure. Its certifications, government clients, and large-scale deployment show that security concerns can be effectively addressed.