A Complete Guide to Android Face Recognition API

Table of contents

- A Complete Guide to Android Face Recognition API

- What is the Android Face Recognition API?

- Why Use the Android Face Recognition API?

- How Android Face Recognition API Works

- Implementation: How to Use Android Face Recognition API

- Real-World Applications of Android Face Recognition API

- Best Practices for Implementing Android Face Recognition API

- Conclusion

Face recognition has become an essential feature in modern applications, ranging from security to user experience enhancements. Android developers can now leverage facial recognition capabilities using the Android Face Recognition API, simplifying complex biometric systems for mobile applications. In this article, we will explore the ins and outs of the Android Face Recognition API, its working principles, how to implement it, and its real-world applications. Whether you are a seasoned developer or just starting, this guide will walk you through the essentials of building face recognition features into your app.

What is the Android Face Recognition API?

The Android Face Recognition API, as the term is used in this guide, refers to the face detection capabilities in Google’s Mobile Vision API suite. It allows developers to detect and track faces in real time and to locate facial landmarks. It does not identify individuals on its own; recognition is something you build on top of the detection results. The API simplifies the implementation of face detection in Android applications, which is often used for security purposes, user identification, and augmented reality experiences.

The API supports multiple functions, including:

- Face Detection: Locates the face within a photo or live camera feed.

- Face Tracking: Continuously tracks the face in real time as the subject moves.

- Facial Feature Detection: Locates facial landmarks such as eyes, nose, and mouth.

- Recognition: Identifying a specific individual is not built in; it requires matching detected faces against face data that your app maintains.

Why Use the Android Face Recognition API?

The demand for secure authentication methods has significantly increased, and face recognition stands out as one of the most user-friendly biometric methods. Here are some reasons to consider using the Android Face Recognition API in your app:

- Security: With increasing data breaches and security threats, face recognition offers a reliable way to secure access to sensitive information or services.

- User Experience: Logging in or unlocking features using face recognition is faster and more convenient than traditional password-based systems.

- Customization: You can build custom face recognition models for your app, providing unique functionality that fits your specific use case.

- Efficient Resource Usage: Google’s face detection technology runs on the device and is optimized for mobile hardware, which helps keep processing and battery costs low.

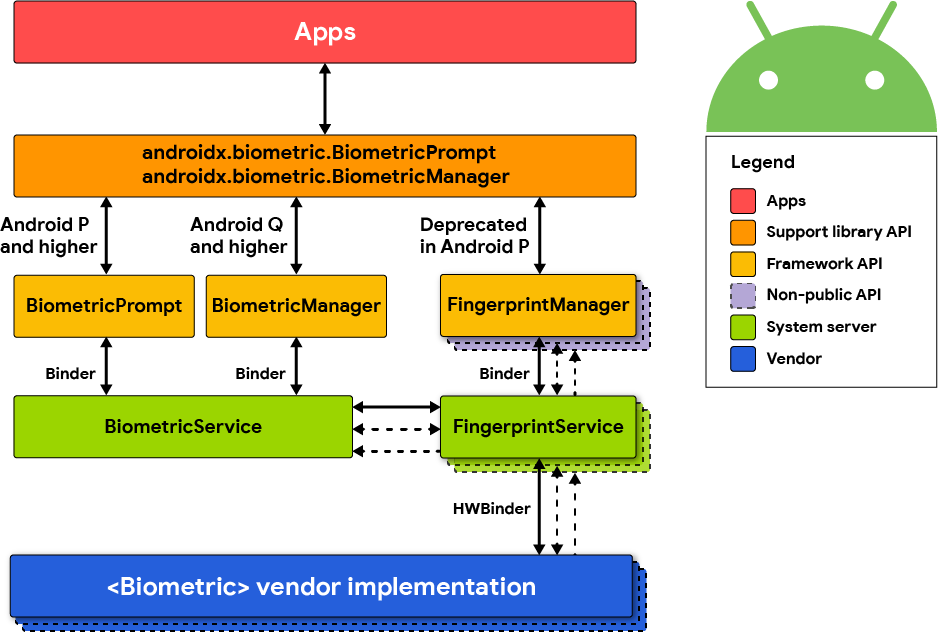

How Android Face Recognition API Works

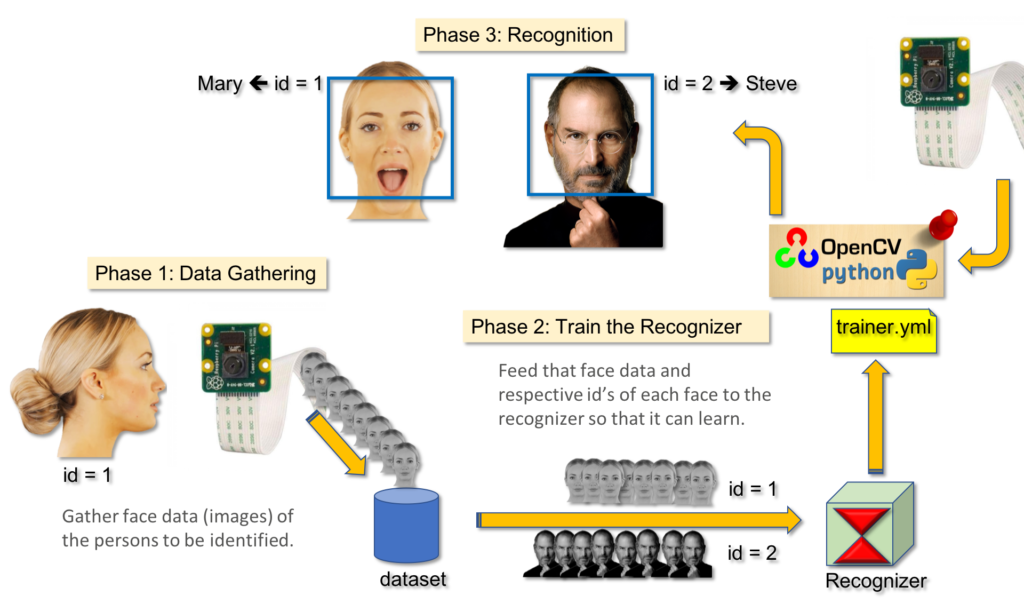

The Android Face Recognition API works by analyzing images or video streams to detect human faces and their features. The API breaks down the process into these basic steps:

- Input Image Processing: The API begins by scanning an image or video stream for potential human faces.

- Facial Feature Mapping: Once a face is detected, it maps facial features like eyes, nose, and mouth.

- Face Tracking: If a video stream is used, the API continues tracking the face as it moves within the frame.

- Face Recognition: The API itself stops at detection and tracking. To identify individuals, your app compares features of the detected face with known faces that it has stored.
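To make the tracking step more concrete, here is a minimal sketch of one common way to keep a stable ID for each face across frames: match every new bounding box to the previous box it overlaps most (intersection over union). This is an illustration only, not how Mobile Vision tracks faces internally. The names `FaceBox`, `SimpleFaceTracker`, and the `minIou` threshold are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical axis-aligned face bounding box in pixel coordinates. */
final class FaceBox {
    final float left, top, right, bottom;

    FaceBox(float left, float top, float right, float bottom) {
        this.left = left; this.top = top; this.right = right; this.bottom = bottom;
    }

    float area() {
        return Math.max(0f, right - left) * Math.max(0f, bottom - top);
    }

    /** Intersection over union: 0 for disjoint boxes, 1 for identical boxes. */
    static float iou(FaceBox a, FaceBox b) {
        float w = Math.min(a.right, b.right) - Math.max(a.left, b.left);
        float h = Math.min(a.bottom, b.bottom) - Math.max(a.top, b.top);
        if (w <= 0f || h <= 0f) return 0f;
        float inter = w * h;
        return inter / (a.area() + b.area() - inter);
    }
}

/** Greedy tracker: a detection inherits the ID of the previous box it overlaps most. */
final class SimpleFaceTracker {
    private final float minIou;            // assumed overlap threshold, tune per app
    private Map<Integer, FaceBox> previous = new HashMap<>();
    private int nextId = 0;

    SimpleFaceTracker(float minIou) { this.minIou = minIou; }

    /** Returns one track ID per detection, in the same order as the input list. */
    List<Integer> update(List<FaceBox> detections) {
        Map<Integer, FaceBox> current = new HashMap<>();
        List<Integer> ids = new ArrayList<>();
        for (FaceBox d : detections) {
            int bestId = -1;
            float bestIou = minIou;
            for (Map.Entry<Integer, FaceBox> e : previous.entrySet()) {
                float overlap = FaceBox.iou(d, e.getValue());
                // Skip IDs already claimed by another detection in this frame.
                if (overlap >= bestIou && !current.containsKey(e.getKey())) {
                    bestId = e.getKey();
                    bestIou = overlap;
                }
            }
            if (bestId < 0) bestId = nextId++;   // no match: start a new track
            current.put(bestId, d);
            ids.add(bestId);
        }
        previous = current;                       // faces not seen this frame are dropped
        return ids;
    }
}
```

In a Mobile Vision project you would not normally write this yourself: with tracking enabled, each detected `Face` already carries an ID via `getId()`. The sketch only shows the kind of bookkeeping that tracking involves.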

Implementation: How to Use Android Face Recognition API

Prerequisites

Before you start using the Android Face Recognition API, ensure that you have the following:

- Android Studio installed.

- A working knowledge of Java or Kotlin.

- A basic understanding of Android development.

- An Android device for testing purposes.

Step-by-Step Guide to Implement Android Face Recognition API

- Set Up Android Studio Project

Start by creating a new project in Android Studio. Ensure you have the latest Android SDK version installed for smooth API integration.

- Add the Mobile Vision API Dependency

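The Android Face Recognition API is part of Google’s Mobile Vision API, which Google has since deprecated in favor of ML Kit; the steps below apply to projects that still use Mobile Vision. To include it in your project, add the following dependency to your app-level build.gradle file: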

    dependencies {
        implementation 'com.google.android.gms:play-services-vision:20.1.3'
    }

Sync the project to download the necessary files.

- Request Camera Permission

Since face recognition requires access to the device’s camera, you’ll need to request camera permissions in your AndroidManifest.xml:

    <uses-permission android:name="android.permission.CAMERA" />

Also, ensure that the app handles runtime permissions if targeting Android 6.0 (API level 23) or higher.

- Initialize Face Detector

In your activity or fragment, initialize the FaceDetector object provided by the Mobile Vision API. Here’s an example:

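    // Requires: import com.google.android.gms.vision.face.FaceDetector;
    FaceDetector faceDetector = new FaceDetector.Builder(context)
            .setTrackingEnabled(true)                      // keep a consistent ID per face across frames
            .setLandmarkType(FaceDetector.ALL_LANDMARKS)   // locate eyes, nose, mouth, and other landmarks
            .build();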

- Create a Camera Source

Next, create a CameraSource that will feed live video data into the face detector:

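    // Requires: import com.google.android.gms.vision.CameraSource;
    CameraSource cameraSource = new CameraSource.Builder(context, faceDetector)
            .setFacing(CameraSource.CAMERA_FACING_FRONT)   // use the front (selfie) camera
            .setRequestedPreviewSize(640, 480)             // a request; the device may pick a nearby size
            .setAutoFocusEnabled(true)
            .build();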

- Detect and Track Faces

Set up a detector processor to handle detected faces in real-time:

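    // Requires: import com.google.android.gms.vision.MultiProcessor;
    // MultiProcessor creates one tracker per detected face through the factory you supply.
    faceDetector.setProcessor(
            new MultiProcessor.Builder<>(new GraphicFaceTrackerFactory()).build());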

Code extracted from: Detect faces with ML Kit on Android

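GraphicFaceTrackerFactory is not part of the library; it is a class you write that implements MultiProcessor.Factory<Face> and returns a tracker for each new face. Implement that tracker (GraphicFaceTracker) to receive updates for the face, read facial features such as the eyes and nose, and follow them through the video stream.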

- Handling Recognition

The API itself does not identify individuals. To add recognition, you can build your own database of known faces, extract a numeric feature vector for each face, and match the features of a detected face against that database.
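One way to do the matching step is to compare feature vectors (often called embeddings) with cosine similarity and accept the best match only if it clears a threshold. The sketch below assumes you already have embeddings from a separate face-embedding model, which Mobile Vision does not provide. The `FaceMatcher` class and the threshold value are hypothetical and would need tuning against real data.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper that matches a face embedding against enrolled embeddings. */
final class FaceMatcher {
    private final Map<String, float[]> enrolled = new LinkedHashMap<>();
    private final double threshold;   // minimum cosine similarity to accept a match

    FaceMatcher(double threshold) { this.threshold = threshold; }

    /** Stores a copy of the embedding under the given person's name. */
    void enroll(String name, float[] embedding) {
        enrolled.put(name, embedding.clone());
    }

    /** Returns the best-matching name, or null if no enrolled face is similar enough. */
    String identify(float[] probe) {
        String best = null;
        double bestScore = threshold;
        for (Map.Entry<String, float[]> e : enrolled.entrySet()) {
            double score = cosineSimilarity(probe, e.getValue());
            if (score >= bestScore) {
                best = e.getKey();
                bestScore = score;
            }
        }
        return best;
    }

    /** Cosine similarity in [-1, 1]; returns 0 if either vector is all zeros. */
    static double cosineSimilarity(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("embedding lengths differ");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        if (normA == 0 || normB == 0) return 0;
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }
}
```

Returning null for "no match" keeps unknown faces from being forced onto the closest enrolled person, which matters for any security-related use. A linear scan like this is fine for a small on-device database; larger databases usually need an index.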

Real-World Applications of Android Face Recognition API

The Android Face Recognition API is used in various industries for a multitude of purposes. Let’s look at some common applications:

- Security Systems: Many apps, especially in banking and finance, use face recognition to secure user accounts and sensitive data. A user can authenticate with their face rather than a password, reducing the risk of breaches.

- Attendance Systems: Schools, workplaces, and event organizers have started adopting facial recognition-based attendance systems. The API can detect employees’ or students’ faces, which the system then matches to produce accurate and quick attendance logs.

- Social Media Filters: Platforms like Instagram and Snapchat use facial feature detection to create fun and interactive filters. By using the Android Face Recognition API, developers can build custom filters that enhance user engagement.

- Augmented Reality (AR): Face tracking is vital for augmented reality applications. For instance, AR-powered makeup apps allow users to try on cosmetics virtually by tracking the face and its landmarks.

- Healthcare: Face recognition is gaining traction in healthcare for patient identification, helping ensure that medical records are matched to the right individual.

Best Practices for Implementing Android Face Recognition API

While using the Android Face Recognition API, it’s essential to follow best practices to ensure optimal performance and user experience:

- Optimize for Low-Light Conditions: Face detection accuracy may drop in poor lighting. You can prompt users to move to a brighter spot or increase camera exposure.

- Consider Privacy: Always be transparent about the use of facial recognition and seek consent from users. Store face data securely to prevent unauthorized access.

- Handle Multiple Faces: The API allows for detecting multiple faces in one frame. Make sure your app handles these situations gracefully, especially in group settings.

- Test on Different Devices: Since Android runs on a wide range of devices, test the feature on different models and screen sizes to ensure compatibility.
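Two of the practices above lend themselves to small helper functions. The sketch below estimates frame brightness so the app can decide when to prompt the user about lighting, and picks the largest face when several are detected. It assumes preview frames arrive in NV21, a common Android camera preview format in which the first width × height bytes are the luma (Y) plane. The `FrameChecks` class, the box layout `{left, top, right, bottom}`, and any brightness threshold you pass in are hypothetical.

```java
import java.util.List;

/** Hypothetical pre-checks to run on a camera frame and its detected faces. */
final class FrameChecks {

    /** Mean luma (0 to 255) of an NV21 frame, computed from the Y plane only. */
    static double meanLuma(byte[] nv21, int width, int height) {
        int pixels = width * height;
        if (pixels <= 0 || nv21.length < pixels) {
            throw new IllegalArgumentException("frame smaller than width * height");
        }
        long sum = 0;
        for (int i = 0; i < pixels; i++) {
            sum += nv21[i] & 0xFF;   // bytes are signed in Java; mask to 0..255
        }
        return (double) sum / pixels;
    }

    /** True when the frame is darker than minLuma (an assumed, app-tuned threshold). */
    static boolean isTooDark(byte[] nv21, int width, int height, double minLuma) {
        return meanLuma(nv21, width, height) < minLuma;
    }

    /** Index of the largest box by area, or -1 for an empty list. */
    static int largestFaceIndex(List<float[]> boxes) {
        int best = -1;
        float bestArea = -1f;
        for (int i = 0; i < boxes.size(); i++) {
            float[] b = boxes.get(i);   // {left, top, right, bottom}
            float area = Math.max(0f, b[2] - b[0]) * Math.max(0f, b[3] - b[1]);
            if (area > bestArea) {
                best = i;
                bestArea = area;
            }
        }
        return best;
    }
}
```

Treating the largest face as the primary subject is only one possible policy for group shots; an app could just as well ask the user to choose. With Mobile Vision, the box for each face can be derived from `Face.getPosition()`, `getWidth()`, and `getHeight()`.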

Conclusion

The Android Face Recognition API offers a powerful, flexible, and easy-to-use foundation for developers looking to integrate facial recognition into their applications. Whether you’re building a security app, an AR experience, or something entirely new, it handles face detection and tracking, leaving you to add the matching logic that identifies individuals. By following the implementation steps and best practices discussed in this article, you’ll be well on your way to creating a successful and engaging user experience.